Allconnect Marketplace Redesign

Using iterative, small-scale user testing to save time and resources while still delivering significant gains in key product KPIs.

Timeline

4 weeks

Methods

Concept testing, survey, usability testing

Context

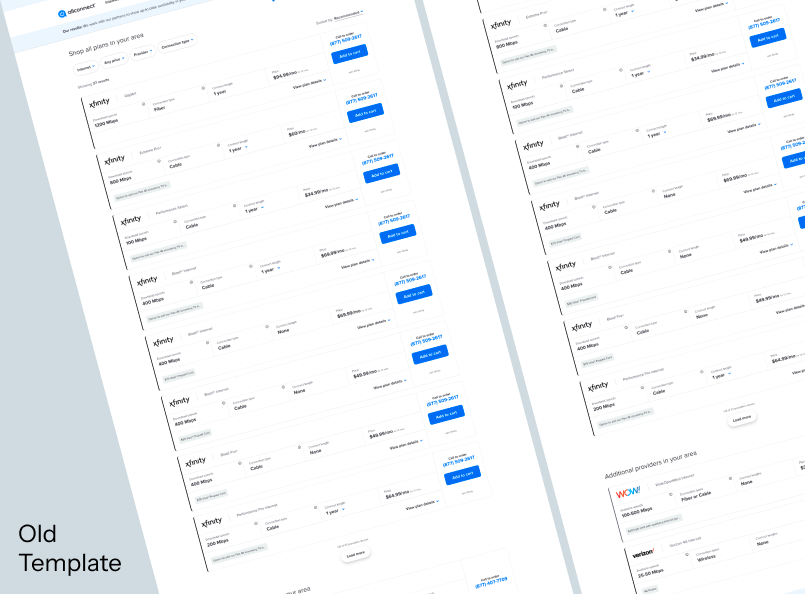

Allconnect was a startup with one goal: to provide a user-friendly comparative marketplace experience for customers seeking to buy new broadband internet and TV services. To reach that goal, the product and design team set their sights on improving the “results application,” the primary component of the website, where users enter their address and compare the internet services available to them.

This project was immense in scale, with large egos to boot. Product wanted to test fast and dirty, pressing the design and development teams to ship new designs for live A/B testing on the website regardless of how confident anyone was in them. Meanwhile, every aspect of the design was stuck in decision paralysis because there was no data to back up anyone's assumptions. To avoid losing more time and resources to these debates, I proposed that the UX and design team use small-scale user testing to narrow down our options before committing development resources and 2+ weeks to A/B testing.

By incorporating user research into the experimentation process, we saved countless hours of design and development time and focused our energy on refining a data-backed design that increased conversion rate by 40% and monthly profit by $22k.

Without a doubt, Aileen is the glue that holds so many projects together. She is the backbone of all [Allconnect] projects. She is constantly challenging previous assumptions we've made on site to continuously push for the best product experience.

Director of Design

Methodology

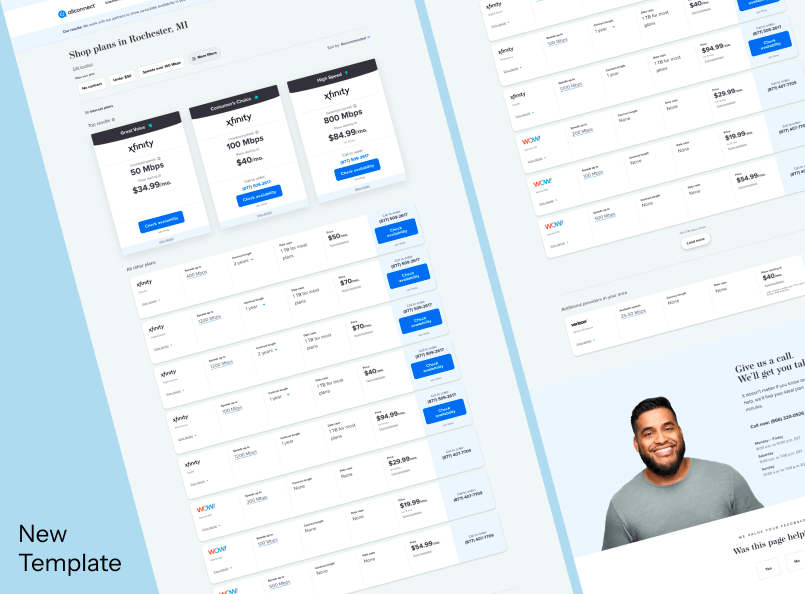

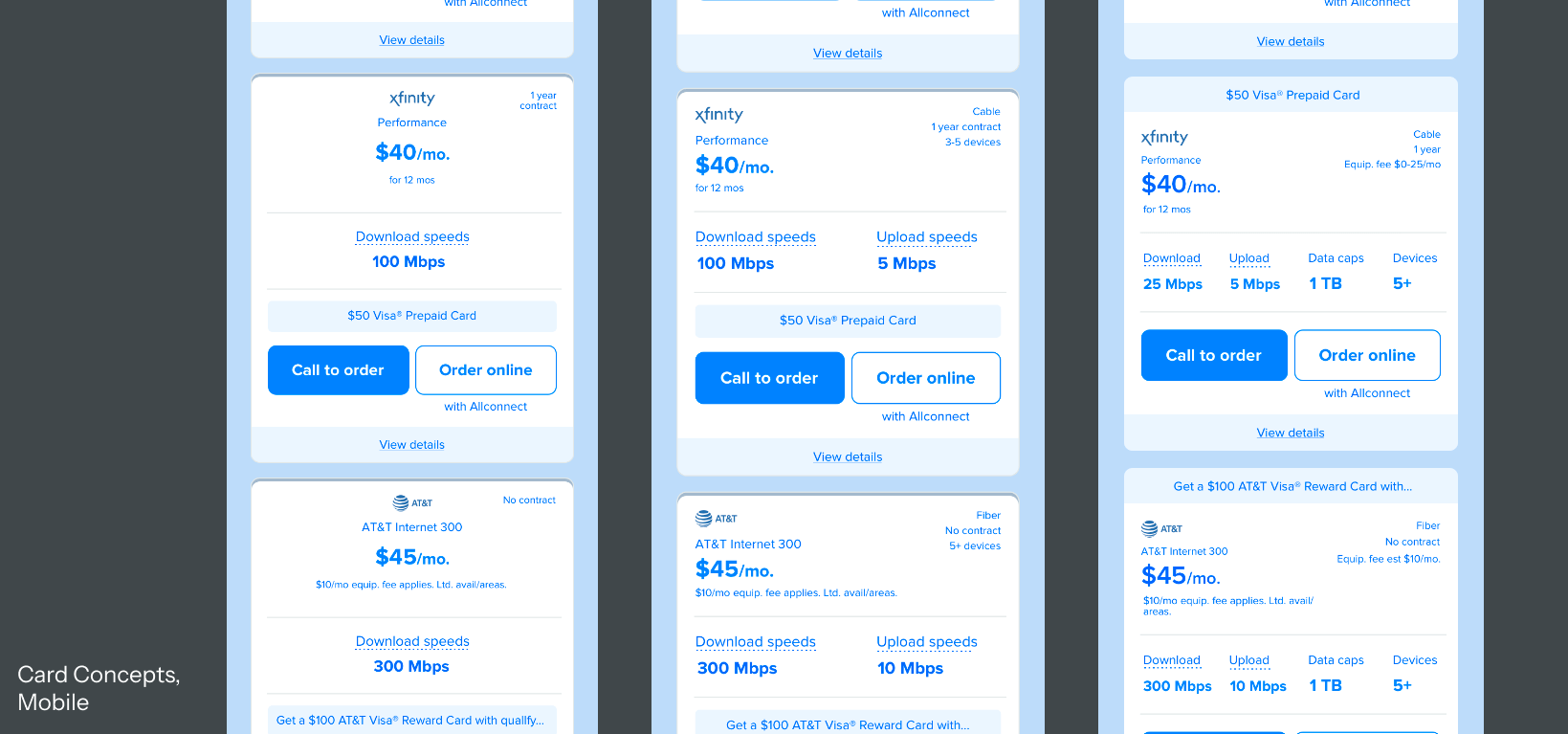

I broke this project down into two steps: testing the product cards on their own, and testing the cards in the context of the entire page or application. Since the cards were the foundation of the entire experience, I used two methods, unmoderated concept testing and a preference survey, to gather enough data to confidently narrow four desktop designs and three mobile designs down to one of each.

For the unmoderated test, I recruited 18 participants through UserTesting to complete a series of tasks using one randomly assigned design, then showed them the remaining designs to collect comparative feedback. This let me see how each card performed on its own and gather preferences relative to the other designs, which we used to rank them. The survey was much simpler: 76 participants ranked each design on ease of use and on how much information it gave them to compare internet plans.

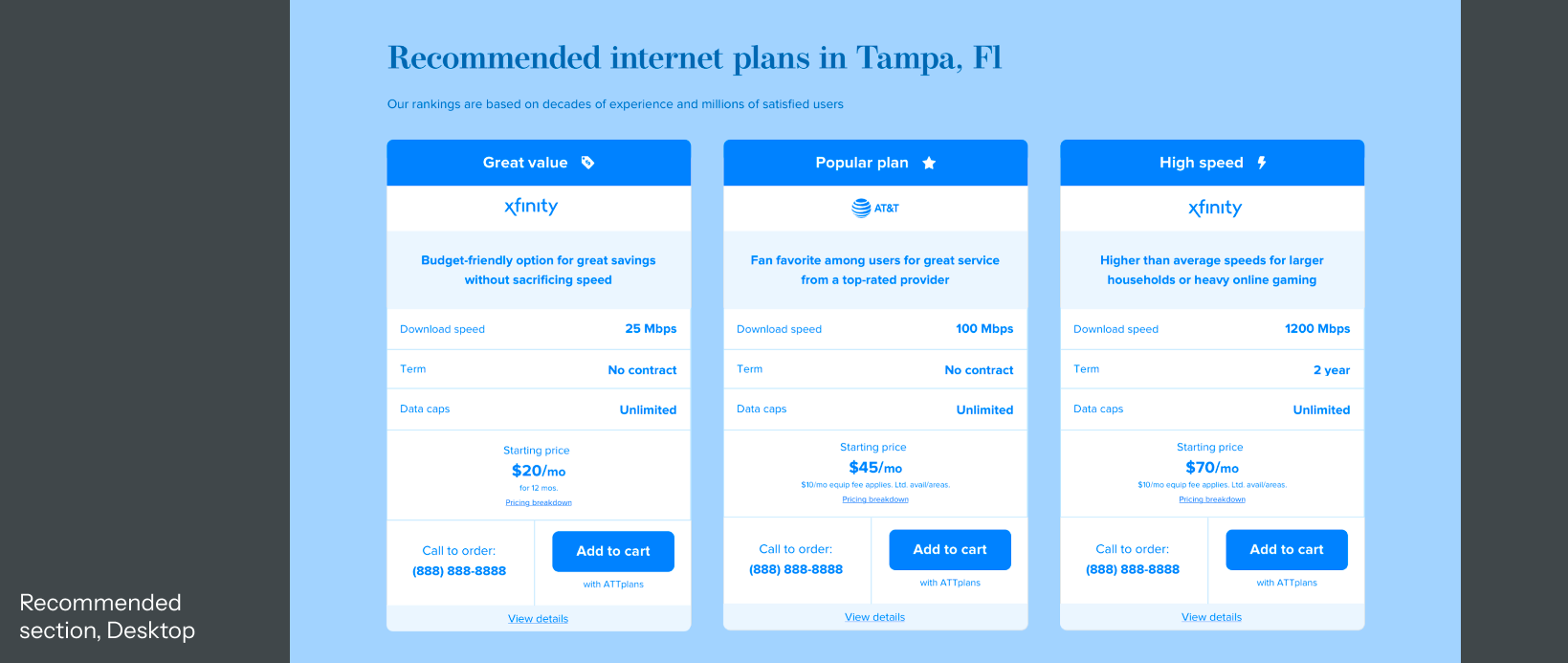

Findings from these studies allowed us to narrow our product card options down to the design that best balanced two needs: enough information to compare products, and enough simplicity to avoid overwhelming the user. With the card selected, we moved on to usability testing that design in the context of a full results application page, along with a new editorialized “recommended for you” section.

Ten participants were recruited through UserTesting and asked to perform tasks around identifying and comparing internet plans on mobile or desktop devices, then answered follow-up questions about the overall layout, ease of use, and level of information provided. While feedback about the cards stayed largely consistent with the earlier studies, we caught several issues with the newly added section before implementation, saving several weeks’ worth of development and A/B testing time.

After a few design tweaks, the product team launched our winning design as an A/B test against the control experience. A month later, once enough sessions had been recorded to reach statistical significance, it was clear that the new design was our champion, with significant conversion and profit gains across all devices and audiences. This result not only showed that small-scale testing can iteratively lead us to the best outcome, it also established the UX and design team as a reliable partner to the product team in reaching the KPI goals they were after.